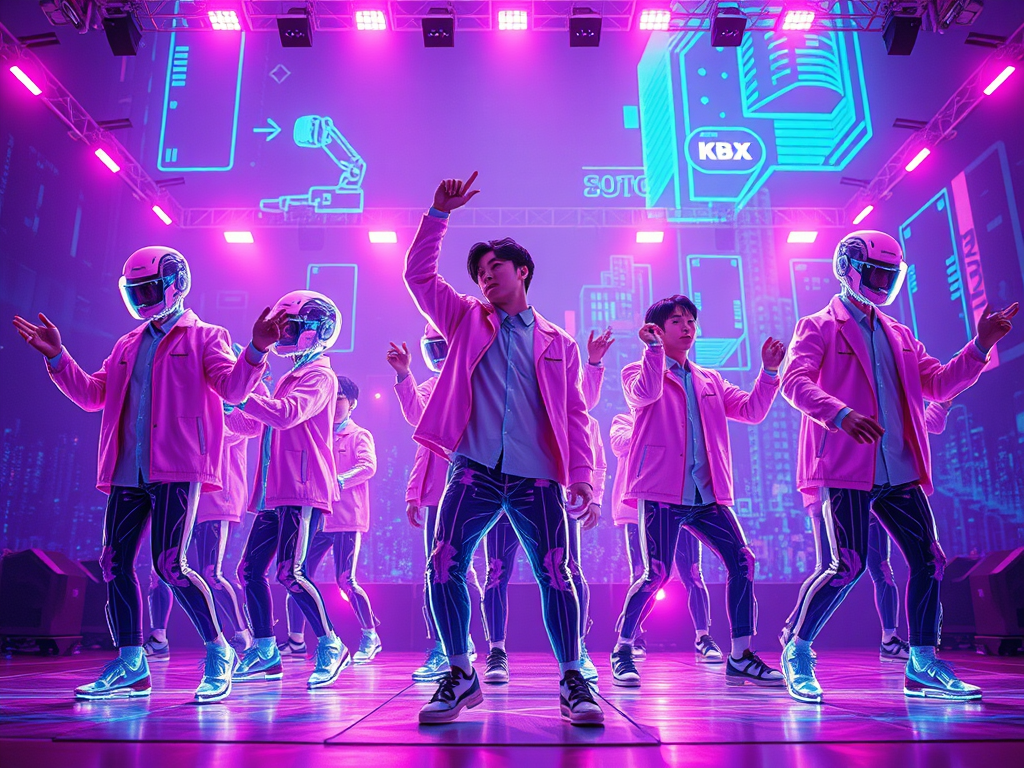

A new investigation by Maldita.es has uncovered a disturbing corner of TikTok where AI-generated videos portray girls who look unmistakably underage, styled and posed in ways no child ever should be. These clips aren’t obscure. They’re collecting massive engagement, drawing huge audiences, and quietly funneling viewers toward darker places online.

Here’s the part that should make every adult sit up straight: the comment sections. Researchers found trails leading straight to Telegram chats advertising illegal sexual material involving children. Not hints. Not dog whistles. Direct pathways. And yet, when many of these accounts were reported, most stayed live.

TikTok says it has iron-clad rules banning this kind of content, with no exceptions and no gray areas. On paper, those standards sound reassuring. In practice, the report shows something else. Appeals were rejected with clockwork speed. Human review, if any took place, was not evident.

Carlos Hernández-Echevarría, who led the research, didn’t mince words: this isn’t subtle, and it isn’t debatable. It’s obvious, and it’s grotesque. The audience behavior makes that crystal clear, because predators don’t accidentally gather in the same comment threads.

The bigger issue here is the collision of generative AI, attention-driven platforms, and slow enforcement. Tools designed for creativity are being twisted into engines for exploitation, while moderation systems lag behind reality.

For parents, guardians, and adults in general, this is a wake-up call. Not to panic—but to push. Push for accountability. Push for faster removals. Push lawmakers and platforms to treat AI abuse involving children as an emergency, not a policy footnote.

Because when technology moves faster than responsibility, kids pay the price. And that’s something none of us should ever scroll past.
